Introduction to Multimodal Deep Learning in Breast Cancer Classification

Overview of Breast Cancer Diagnosis Challenges

Breast cancer remains one of the most common cancers worldwide, affecting millions of women. Incidence has been rising in many regions, which highlights the pressing need for improved detection and treatment strategies. Early diagnosis plays a critical role in improving survival rates, but it comes with its own challenges: tumors present differently across individuals, which makes accurate early detection difficult. Factors such as tumor size, location, and molecular subtype all add to the complexity of diagnosis.

Conventional tools such as mammography and biopsy have been effective, but imaging-based screening in particular can lack precision, especially with dense breast tissue or heterogeneous tumor types. False positives lead to unnecessary follow-up procedures, and false negatives can delay crucial treatment. Cancers missed at an early stage may progress to advanced disease, which narrows treatment options and lowers the chances of survival. In light of these challenges, multimodal deep learning is emerging as a promising approach in the fight against breast cancer.

The Role of Multimodal Deep Learning in Cancer Detection

Multimodal deep learning (DL) has taken center stage in breast cancer research as a powerful tool to improve diagnostic accuracy. By integrating various data sources, such as imaging, clinical records, and genetic information, multimodal DL provides a more comprehensive understanding of each patient’s unique cancer profile.

What is Multimodal Deep Learning?

In simple terms, multimodal deep learning refers to combining different data types, or modalities, to make predictions. In breast cancer classification, this can include:

- Imaging data: Mammography, MRI, ultrasound, and histopathology images.

- Clinical data: Patient demographics, medical history, and treatment records.

- Genomic data: Genetic markers, mutations, and other molecular profiles.

These different data sources are fused together in a deep learning model to analyze patterns, correlations, and outcomes that a single modality alone might miss.

Why Is Multimodal DL Gaining Traction?

Multimodal DL is rapidly gaining popularity because it addresses some of the limitations of single-source methods. Breast cancer, like many complex diseases, cannot be fully understood from one type of data alone. Imaging might show tumor growth, but when combined with clinical and genetic data, a model may be able to predict not just the presence of cancer but also its aggressiveness, likelihood of recurrence, and response to treatment. Integrating multiple modalities makes it possible to gain deeper insight into how the disease behaves and how it can be treated effectively.

Key Advantages of Multimodal DL in Breast Cancer

- Enhanced diagnostic accuracy: Combining complementary data can improve classification results.

- Personalized treatment predictions: Helps tailor treatment plans to the patient’s unique genetic and clinical makeup.

- Improved early detection: Analyzing multiple data sources can help detect cancer earlier.

By providing a holistic view of the patient, multimodal DL helps doctors make more informed decisions, leading to better outcomes for breast cancer patients.

This post aims to serve as a comprehensive guide on how multimodal deep learning techniques enhance breast cancer classification. The article will cover key strategies for integrating various data types, discuss the challenges and opportunities within the field, and provide insights into the latest trends. Whether you’re a healthcare professional, researcher, or technologist, this guide will offer valuable information on the cutting-edge techniques shaping breast cancer detection today.

Up next, we’ll explore the key approaches to multimodal fusion in deep learning, highlighting different fusion strategies like decision fusion, feature fusion, and hybrid fusion, each offering unique strengths and use cases in breast cancer classification. By understanding these approaches, you’ll gain a deeper insight into how different modalities are combined to create more accurate and efficient models.

Stay tuned as we delve into these multimodal fusion strategies in the next section.

Key Approaches to Multimodal Fusion in Deep Learning

Decision Fusion: A Comprehensive Overview

In multimodal deep learning for breast cancer classification, decision fusion (also called late fusion) is one of the foundational approaches. Separate models are trained on different data modalities, and instead of fusing raw data or features, decision fusion aggregates their individual predictions into a final decision, for example by averaging predicted probabilities or by majority vote.

Key Advantages of Decision Fusion:

- Handling Missing Data: One of the most significant benefits of decision fusion is its robustness to missing or incomplete data. Because each model works independently on its own modality, a prediction can still be made from the remaining modalities when one is unavailable, although the fused prediction then rests on less evidence.

- Reducing Dimensionality Issues: Decision fusion simplifies the model by operating on a smaller set of predictions rather than a high-dimensional feature space. This makes it particularly effective when dealing with heterogeneous data types, such as imaging, clinical records, and genomics.

- Increased Flexibility: Decision fusion allows for flexibility in using different models optimized for specific data types. This means that specialized models can be deployed for imaging, clinical, or genetic data, improving the performance for each modality before combining them for an overall decision.
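
A minimal sketch of decision fusion in plain Python, assuming each modality-specific model has already produced a malignancy probability. The modality names, the fusion weights, and the `decision_fusion` helper are hypothetical; weighted averaging of probabilities is just one common combination rule (majority voting is another). Missing modalities are skipped and the remaining weights renormalized, which is one simple way this scheme copes with incomplete data.

```python
from collections.abc import Mapping
from typing import Optional


def decision_fusion(
    probs: Mapping[str, Optional[float]],
    weights: Mapping[str, float],
) -> float:
    """Weighted average of per-modality malignancy probabilities.

    Modalities whose prediction is None (data missing) are skipped, and the
    remaining weights are renormalized so they still sum to 1.
    """
    available = {m: p for m, p in probs.items() if p is not None}
    if not available:
        raise ValueError("at least one modality must provide a prediction")
    total_weight = sum(weights[m] for m in available)
    return sum(weights[m] * p for m, p in available.items()) / total_weight


# Hypothetical fusion weights, e.g. chosen on a validation set.
weights = {"mammography": 0.5, "clinical": 0.2, "genomic": 0.3}

# All three modality models produced a prediction.
full = decision_fusion({"mammography": 0.9, "clinical": 0.6, "genomic": 0.7}, weights)

# Genomic data missing for this patient: fuse the two remaining predictions.
partial = decision_fusion({"mammography": 0.9, "clinical": 0.6, "genomic": None}, weights)
print(round(full, 3), round(partial, 3))
```

Because the combiner only sees one number per modality, it stays small no matter how high-dimensional the underlying images or genomic profiles are.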

Limitations of Decision Fusion:

- Neglecting Low-Level Interactions: One drawback of decision fusion is that it doesn’t account for low-level interactions between data modalities. For example, the subtle interplay between genetic mutations and radiological findings may not be fully captured if predictions are made independently before combining.

- Potential for Overfitting: While decision fusion can improve overall accuracy, the final prediction may lean too heavily on the strengths or weaknesses of individual models. If one model dominates, the ensemble’s diversity is diminished, reducing overall robustness. When the combination weights are themselves learned, the combiner can also overfit if it is trained on the same data used to fit the base models.
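
One way to make model dominance visible, sketched here under stated assumptions, is to learn the fusion weights with a small logistic-regression meta-classifier (stacking) instead of fixing them by hand. The `fit_stacker` helper is hypothetical and the data are synthetic: an informative imaging model and a near-random clinical model. The learned weights show how heavily the combiner leans on each base model. To limit overfitting, the meta-classifier should be trained on out-of-fold predictions rather than on predictions for the base models’ own training data.

```python
import numpy as np


def fit_stacker(base_probs: np.ndarray, labels: np.ndarray,
                lr: float = 0.5, epochs: int = 2000) -> tuple[np.ndarray, float]:
    """Fit a logistic-regression meta-classifier on base-model probabilities
    using plain batch gradient descent.

    base_probs: (n_patients, n_models) probabilities, ideally out-of-fold.
    labels:     (n_patients,) binary ground truth (1 = malignant).
    """
    n, k = base_probs.shape
    w, b = np.zeros(k), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(base_probs @ w + b)))  # predicted probability
        grad = p - labels                                 # log-loss gradient w.r.t. the logits
        w -= lr * base_probs.T @ grad / n
        b -= lr * grad.mean()
    return w, float(b)


# Synthetic example: the imaging model separates the classes well, the clinical one does not.
rng = np.random.default_rng(0)
y = rng.integers(0, 2, size=500)
imaging = np.clip(0.7 * y + 0.15 + rng.normal(0.0, 0.1, size=500), 0.0, 1.0)
clinical = rng.uniform(0.0, 1.0, size=500)

w, b = fit_stacker(np.column_stack([imaging, clinical]), y)
print({"imaging": round(float(w[0]), 2), "clinical": round(float(w[1]), 2)})
```

A large gap between the learned weights signals that one modality is carrying the decision, which is exactly the loss of diversity described above.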

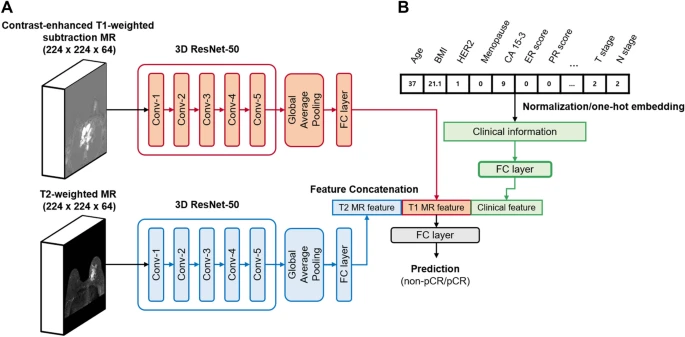

Feature Fusion: Leveraging the Power of Combined Data

Feature fusion is another widely used method in multimodal deep learning that combines different data types at the feature level. Features are extracted from each modality and fused, for example by concatenation, before being fed into a model for prediction. Unlike decision fusion, feature fusion emphasizes learning shared representations, allowing the model to capture correlations and patterns across multiple modalities simultaneously.
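
To make the idea concrete, here is a minimal NumPy sketch of a feature-fusion forward pass, assuming each modality has already been turned into a feature vector: a hypothetical 512-dimensional image embedding (for instance from a CNN backbone) and 10 clinical variables. Each branch is projected, the projections are concatenated into one shared representation, and a single classifier head makes the prediction. The layer sizes are illustrative assumptions and the weights are random and untrained; a real model would be built and trained end to end in a deep learning framework.

```python
import numpy as np

rng = np.random.default_rng(42)


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def dense(in_dim: int, out_dim: int) -> tuple[np.ndarray, np.ndarray]:
    """Random (untrained) weights and a zero bias for one fully connected layer."""
    return rng.normal(0.0, 0.1, size=(in_dim, out_dim)), np.zeros(out_dim)


# Hypothetical layer sizes.
W_img, b_img = dense(512, 64)        # image-feature branch
W_clin, b_clin = dense(10, 16)       # clinical-feature branch
W_head, b_head = dense(64 + 16, 1)   # classifier on the fused representation


def feature_fusion_forward(img_feat: np.ndarray, clin_feat: np.ndarray) -> np.ndarray:
    """Return malignancy probabilities of shape (batch,)."""
    h_img = relu(img_feat @ W_img + b_img)           # (batch, 64)
    h_clin = relu(clin_feat @ W_clin + b_clin)       # (batch, 16)
    fused = np.concatenate([h_img, h_clin], axis=1)  # (batch, 80) shared representation
    logits = fused @ W_head + b_head                 # (batch, 1)
    return 1.0 / (1.0 + np.exp(-logits[:, 0]))


probs = feature_fusion_forward(rng.normal(size=(4, 512)), rng.normal(size=(4, 10)))
print(probs.shape)  # (4,)
```

When a model like this is trained, the classifier head sees both branches at once, so gradients flow back into both projections; that is what lets the network learn cross-modal patterns rather than two independent ones.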

Advantages of Feature Fusion

- Learning Shared Representations: By fusing features from different data types, feature fusion enables models to identify shared patterns across modalities, which can significantly enhance predictive power. For example, fusing MRI images with clinical data allows for a more holistic understanding of a patient’s condition.

- Increased Granularity: Because feature fusion combines modalities before the final prediction is made, it can uncover cross-modal relationships that decision fusion might miss. This greater granularity allows models to detect subtle patterns that may affect breast cancer prognosis or treatment outcomes.

Challenges with Feature Fusion

- Dimensionality Issues: One of the biggest challenges with feature fusion is managing the high-dimensional feature space. Combining multiple data sources results in an extensive feature set, which can lead to overfitting or increased computational complexity.

- Heterogeneous Data Formats: Fusing different types of data, such as 1D clinical data with 3D imaging data, presents technical challenges. Proper normalization and pre-processing techniques are essential to ensure that each modality contributes effectively to the final model.
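
Both challenges are often tackled during preprocessing. The sketch below assumes a hypothetical 5,000-gene expression matrix and 12 raw clinical variables (random placeholders here). It z-scores each modality so that no modality dominates through its numeric scale, then uses PCA, computed via NumPy’s SVD, to compress the high-dimensional genomic block before concatenation. PCA is one option among several; learned encoders or feature selection are common alternatives.

```python
import numpy as np


def standardize(x: np.ndarray) -> np.ndarray:
    """Z-score each column so modalities with large numeric ranges don't dominate."""
    std = x.std(axis=0)
    std[std == 0] = 1.0  # guard against constant columns
    return (x - x.mean(axis=0)) / std


def pca_reduce(x: np.ndarray, n_components: int) -> np.ndarray:
    """Project centred data onto its top principal components via SVD."""
    centred = x - x.mean(axis=0)
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    return centred @ vt[:n_components].T


rng = np.random.default_rng(0)
n_patients = 100
genomic = rng.normal(size=(n_patients, 5000))        # hypothetical expression matrix
clinical = rng.normal(size=(n_patients, 12)) * 50.0  # hypothetical raw clinical values

fused = np.concatenate(
    [pca_reduce(standardize(genomic), 20), standardize(clinical)], axis=1
)
print(fused.shape)  # (100, 32)
```

In a real pipeline the means, standard deviations, and principal components should be fitted on the training split only and then applied to validation and test data, to avoid information leakage.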

Hybrid Fusion: Combining the Best of Both Worlds

Hybrid fusion brings together the strengths of both decision fusion and feature fusion by applying feature fusion to certain data types and decision fusion to others. This approach allows for a more comprehensive model that captures both low-level feature interactions and high-level decision-making processes.
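
A minimal NumPy sketch of one possible hybrid layout, assuming imaging and clinical features are fused at the feature level while a separate genomic model contributes at the decision level. The feature sizes, the weight `alpha`, and the random parameters are hypothetical stand-ins for trained models; other splits of modalities between the two stages are equally valid.

```python
import numpy as np

rng = np.random.default_rng(7)


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


# Hypothetical, untrained parameters standing in for trained models.
w_fused = rng.normal(0.0, 0.1, size=64 + 10)  # head over concatenated imaging+clinical features
w_genomic = rng.normal(0.0, 0.1, size=20)     # separate genomic model on 20 reduced features


def hybrid_predict(img_feat, clin_feat, genomic_feat=None, alpha=0.7):
    """Stage 1: feature fusion of imaging and clinical data.
    Stage 2: decision fusion with the genomic model's prediction.
    alpha is a hypothetical weight for the feature-fused branch."""
    fused = np.concatenate([img_feat, clin_feat], axis=-1)
    p_fused = sigmoid(fused @ w_fused)
    if genomic_feat is None:  # genomic data missing: use the fused branch alone
        return p_fused
    p_genomic = sigmoid(genomic_feat @ w_genomic)
    return alpha * p_fused + (1.0 - alpha) * p_genomic


img, clin, gen = rng.normal(size=(3, 64)), rng.normal(size=(3, 10)), rng.normal(size=(3, 20))
print(hybrid_predict(img, clin, gen).shape, hybrid_predict(img, clin).shape)
```

The imaging and clinical features interact inside one model, while the genomic branch stays independent and can be dropped when that data is unavailable.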

Key Benefits of Hybrid Fusion

- Mitigating Dimensionality Challenges: Hybrid fusion mitigates the curse of dimensionality by applying feature fusion only where it is most effective and using decision fusion for other modalities. This selective approach keeps the fused feature space from becoming unmanageably large, especially with high-dimensional inputs such as MRI scans or genomic data.

- Minimizing Data Loss: A primary limitation of decision fusion is that it doesn’t capture intricate relationships between modalities. Hybrid fusion addresses this by using feature fusion where those interactions matter, preserving more detailed information while retaining the flexibility of decision fusion.

Challenges of Hybrid Fusion

- Increased Model Complexity: Hybrid fusion adds an extra layer of complexity to the overall model, as different fusion strategies need to be implemented for different modalities. Balancing decision and feature fusion requires sophisticated model architecture design and careful tuning to ensure optimal performance.

Key Takeaways

- Decision Fusion excels at handling missing data and reducing dimensionality issues but may neglect low-level interactions between data types.

- Feature Fusion enables the model to learn shared representations, providing more granular insights but comes with challenges in managing high-dimensional data and heterogeneous formats.

- Hybrid Fusion aims to offer the best of both worlds, combining feature and decision fusion to improve accuracy and limit information loss, at the cost of greater model complexity.

As we explore multimodal deep learning further, it’s essential to understand how various data modalities contribute to improved breast cancer classification. By integrating imaging, clinical, and genomic data, researchers are able to gain a more holistic view of patient profiles, leading to more personalized and accurate cancer predictions.

Practical Applications and Real-world Implementations of Multimodal Deep Learning in Breast Cancer Classification

Real-World Use Cases in Breast Cancer Classification

Multimodal deep learning (DL) is no longer confined to academic research; it is increasingly being evaluated in clinical settings. By integrating diverse data types such as clinical records, imaging, and genomics, multimodal DL models have shown promise for breast cancer diagnosis and prognosis. This section explores examples of how these models are being applied to breast cancer classification in hospitals, research facilities, and treatment planning.

Hospitals and Diagnostic Centers

In hospital settings, multimodal DL models are used to improve early detection of breast cancer, which is crucial for patient survival. For instance, some healthcare facilities have begun integrating mammography data with genomic profiles and clinical information to enhance the accuracy of diagnosis. These models aid radiologists by providing a second layer of validation, flagging potential malignancies that might be missed by traditional methods.

Key benefits

- Enhanced early detection: Combining multiple data sources allows for higher precision in identifying cancerous cells early on.

- Reduced false positives: Advanced models can differentiate between benign and malignant tumors more effectively, reducing unnecessary biopsies.
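
Benefits such as higher precision and fewer false positives are usually quantified with a few standard screening metrics. The sketch below computes sensitivity, specificity, and positive predictive value (PPV, i.e. precision) from confusion-matrix counts. The counts are purely hypothetical and only illustrate how fewer false positives show up as higher specificity and PPV; they are not results from any study.

```python
def screening_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Standard screening metrics from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),  # fraction of cancers that are flagged
        "specificity": tn / (tn + fp),  # fraction of cancer-free cases correctly cleared
        "ppv": tp / (tp + fp),          # fraction of flagged cases that are cancer (precision)
    }


# Hypothetical counts for 1,000 screened patients (illustrative, not real results).
single_modality = screening_metrics(tp=40, fp=90, tn=860, fn=10)
multimodal = screening_metrics(tp=42, fp=60, tn=890, fn=8)

for name, m in [("single-modality", single_modality), ("multimodal", multimodal)]:
    print(name, {k: round(v, 3) for k, v in m.items()})
```

Each false positive avoided is a patient who is not sent for an unnecessary biopsy, which is why specificity and PPV are the metrics to watch for this benefit.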

Research Facilities and Clinical Trials

Research facilities use multimodal DL to conduct studies that go beyond the capabilities of single-modality models. In clinical trials, these models have been used to predict patient responses to neoadjuvant chemotherapy by analyzing a combination of MRI data, histopathology images, and genetic markers. Predicting how a patient might respond to specific treatments can help healthcare providers personalize therapies and improve patient outcomes.

Key impact:

- Personalized treatment plans: Models can identify the most effective treatment pathways for patients based on comprehensive data sets.

- Improved clinical trial accuracy: By drawing on more complete patient data, multimodal models can support more robust analyses, which may contribute to better trial results and faster drug approvals.

Case Studies: Multimodal DL Success Stories

Several case studies highlight the efficacy of multimodal deep learning in breast cancer classification. For example, a hospital in the U.S. used a multimodal model that combined ultrasound, mammography, and genomic data to assess patients with dense breast tissue, where traditional mammograms can miss abnormalities. Integrating these modalities reportedly increased diagnostic accuracy by 20%.

Another research institution in Europe integrated whole-slide imaging with clinical records and RNA sequencing data to classify breast cancer subtypes. The model improved subtype classification by over 15%, allowing for more tailored treatment plans based on the specific molecular characteristics of each cancer subtype.

Best Practices for Implementing Multimodal Deep Learning in Healthcare

While multimodal deep learning holds significant potential in healthcare, successful implementation requires a thoughtful approach. Here are some best practices for integrating these models into real-world healthcare environments.

Combining Modalities Effectively

A key challenge in multimodal deep learning is ensuring that different data types—such as imaging, clinical records, and genomic data—are properly combined. To achieve optimal results, it is important to select the appropriate fusion strategy based on the specific use case.

- Feature fusion: Well suited when interactions between modalities matter and all modalities are reliably available. Features from each modality are combined into a single representation (for example, by concatenation), enabling the model to learn complex cross-modal relationships.

- Decision fusion: Useful when modalities provide largely independent evidence or when some modalities are often missing. Each modality is processed separately, and the outputs are combined at the decision level.

Ensuring Robust Model Interpretability

One of the biggest barriers to adoption in healthcare is the interpretability of AI models. Healthcare professionals need to trust a model’s decisions, which requires transparency in how it reaches its conclusions. Techniques such as SHAP (SHapley Additive exPlanations), which attributes a prediction to individual input features, and Grad-CAM (Gradient-weighted Class Activation Mapping), which highlights the image regions that drove a convolutional network’s output, can make models more interpretable, allowing clinicians to see which features led to specific predictions.

Key tips for interpretability

- Use explainable AI (XAI) techniques to enhance trust.

- Provide visual outputs that highlight which areas of an image or which data points contributed to the decision-making process.
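
For intuition about what SHAP reports, consider the special case of a linear model f(x) = w·x + b with independent features, where SHAP values have a closed form: phi_i = w_i * (x_i - E[x_i]), with the expectation taken over a background set of reference patients. The sketch below uses hypothetical weights and a hypothetical feature layout, and sums the attributions per modality to show which data source pushed a prediction up or down. It is not a general SHAP implementation; nonlinear models need dedicated explainers.

```python
import numpy as np


def linear_shap(w: np.ndarray, x: np.ndarray, background: np.ndarray) -> np.ndarray:
    """Exact SHAP values for a linear model f(x) = w @ x + b, assuming
    independent features: phi_i = w_i * (x_i - mean_i)."""
    return w * (x - background.mean(axis=0))


rng = np.random.default_rng(1)
# Hypothetical fused feature layout: 3 imaging, 2 clinical, 2 genomic features.
groups = {"imaging": slice(0, 3), "clinical": slice(3, 5), "genomic": slice(5, 7)}
w = np.array([1.2, -0.4, 0.8, 0.3, 0.1, 0.9, -0.2])  # hypothetical trained weights
b = -0.5
background = rng.normal(size=(200, 7))  # reference patients
x = rng.normal(size=7)                  # patient to explain

phi = linear_shap(w, x, background)
per_modality = {name: float(phi[s].sum()) for name, s in groups.items()}
print(per_modality)

# Attributions add up to the model output minus its average output (logit scale).
assert np.isclose(phi.sum(), (w @ x + b) - (background @ w + b).mean())
```

Grouping attributions by modality gives clinicians a compact summary, for example that imaging evidence raised the predicted risk while the clinical variables lowered it.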

Utilizing Public Datasets for Research Advancement

To advance breast cancer research, the availability of public datasets is critical. Researchers and developers can leverage existing datasets such as The Cancer Genome Atlas (TCGA) and the Breast Cancer Digital Repository (BCDR) to train multimodal DL models. Together, these datasets cover clinical, imaging, and genomic data that can be used to build more robust and accurate models.

- The Cancer Genome Atlas (TCGA): A comprehensive collection of cancer genomic profiles with accompanying clinical data, offering invaluable material for model training.

- BCDR: A repository that includes mammographic images and clinical data, essential for developing models focused on breast cancer detection and classification.
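
Building a multimodal training set usually starts by aligning records from different sources at the patient level. As one example, TCGA sample barcodes begin with a 12-character patient identifier (project, tissue source site, participant), and characters 14 and 15 encode the sample type, with 01 denoting a primary solid tumor. The sketch below assumes two hypothetical tables keyed this way (the barcodes and values are invented) and keeps only patients that have both a clinical record and primary-tumor expression data.

```python
def patient_id(barcode: str) -> str:
    """First 12 characters of a TCGA barcode, e.g. 'TCGA-XX-0001'."""
    return barcode[:12]


def sample_type(barcode: str) -> str:
    """Two-digit sample-type code, e.g. '01' in 'TCGA-XX-0001-01A-...'."""
    return barcode[13:15]


# Hypothetical records; the barcodes below are invented for illustration.
clinical = {
    "TCGA-XX-0001": {"age": 54, "er_status": "positive"},
    "TCGA-XX-0002": {"age": 61, "er_status": "negative"},
}
expression = {
    "TCGA-XX-0001-01A-11R-ZZZZ-07": [2.1, 0.4],  # primary tumor sample
    "TCGA-XX-0002-11A-01R-ZZZZ-07": [1.3, 0.2],  # normal tissue sample: excluded
    "TCGA-XX-0003-01A-11R-ZZZZ-07": [1.7, 0.9],  # no clinical record: excluded
}


def join_by_patient(clinical: dict, expression: dict) -> dict:
    """Inner join on patient ID, keeping primary-tumor ('01') samples only.
    If a patient has several tumor aliquots, the last one wins here; a real
    pipeline needs an explicit selection rule."""
    tumor_expr = {patient_id(bc): v for bc, v in expression.items()
                  if sample_type(bc) == "01"}
    return {pid: {"clinical": clinical[pid], "expression": tumor_expr[pid]}
            for pid in sorted(clinical.keys() & tumor_expr.keys())}


merged = join_by_patient(clinical, expression)
print(list(merged))  # ['TCGA-XX-0001']
```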

Conclusion: Multimodal Deep Learning in Breast Cancer Classification

As we’ve explored in this guide, multimodal deep learning has the potential to transform breast cancer classification. From improving diagnostic accuracy in hospital settings to supporting research in clinical trials, multimodal DL models give healthcare professionals a powerful tool.

By integrating clinical, imaging, and genomic data, multimodal DL models can offer deeper insights and more accurate predictions than single-modality approaches. Fusing these data types supports more precise early detection, personalized treatment plans, and better outcomes for patients.

In terms of approaches, decision fusion, feature fusion, and hybrid fusion offer the flexibility to match the fusion strategy to the specific application. Despite the challenges of data integration and the curse of dimensionality, emerging trends such as ensemble learning and transfer learning offer promising directions for overcoming these hurdles.

Looking ahead, the practical applications of multimodal DL are likely to keep expanding, with real-world use cases providing ongoing validation of their effectiveness. Hospitals, research facilities, and diagnostic centers are already seeing benefits, and with best practices in place, adoption of these models is likely to grow.

The journey of multimodal deep learning in healthcare is just beginning, and its potential is considerable. By embracing AI-driven multimodal approaches, the medical community can look forward to a future where breast cancer classification is not only more accurate but also more personalized and accessible.

If you’re a researcher, healthcare professional, or developer, now is a good time to explore how multimodal deep learning could fit into your work. Fusing data types can help transform patient care and accelerate medical breakthroughs. Start experimenting with multimodal DL models in your workflows and be part of the future of breast cancer diagnosis and treatment.

Explore the full [Paper] for in-depth insights. All recognition and appreciation go to the brilliant researchers behind this project.