Introduction to Form Understanding in Scanned Documents

In today’s digital age, the digitization of physical documents has revolutionized how we store, process, and analyze information. From historical records to daily business transactions, everything is transitioning from paper-based to digital formats. This shift brings immense benefits, such as more efficient storage, easy access, and the ability to process large volumes of data quickly. However, the challenge lies in understanding and extracting valuable information from scanned documents, which often include forms, invoices, and receipts.

Understanding these documents requires more than just recognizing text. It demands a deep understanding of their layout, structure, and the relationships between various elements. This task, known as form understanding, is a critical area within document analysis and plays a pivotal role in many industries, including finance, healthcare, and government services.

The Growing Impact of Digitization on Document Analysis

With the rise of digital technologies, businesses and institutions are increasingly digitizing physical documents, such as contracts, tax forms, and reports. Digitization not only saves space but also enables automated systems to manage, search, and retrieve data quickly. Yet, as we move from traditional filing cabinets to cloud-based storage systems, extracting meaningful information from these digitized documents becomes crucial.

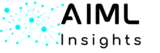

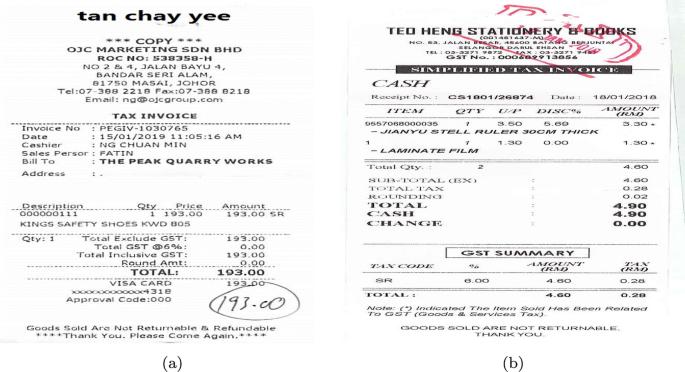

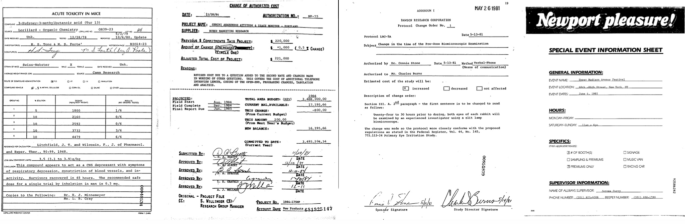

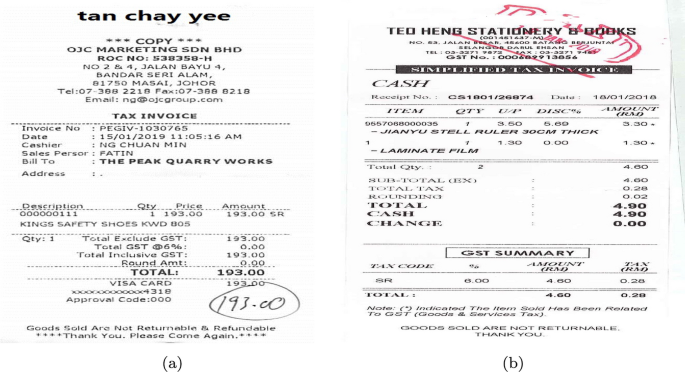

Take forms and invoices, for instance. While they may appear straightforward, they contain key information that varies significantly in structure, layout, and content. An invoice might have tables, handwritten signatures, or logos, all of which can introduce noise in the digitization process. These complexities make the form understanding process all the more challenging and essential.

Key takeaways:

- Digitization transforms physical documents into machine-readable formats, enabling streamlined data management.

- Extracting key information from scanned documents is essential for automated processes, but requires robust models to handle complex layouts and noisy inputs.

Key Challenges in Form Understanding

Form understanding in scanned documents comes with several challenges:

- Noisy Inputs: Scanned documents often contain imperfections, such as low-resolution images, shadows, or handwritten annotations, which can interfere with text recognition systems. These noise elements make it difficult for models to accurately extract information without introducing errors.

- Diverse Layouts: Forms, invoices, and receipts come in a variety of layouts, with different formatting styles. Each document may contain tables, images, and different font sizes or alignments. Traditional text extraction models may fail to understand the importance of these visual elements in structuring the data.

- Multi-Modal Data Formats: Many documents combine textual information with images, logos, and even handwritten content. To accurately interpret such data, models must effectively integrate multi-modal learning, where text and images are processed together to provide a holistic understanding of the document.

These challenges highlight the need for sophisticated machine learning models that can interpret both the content and structure of a document, making form understanding a complex but essential task in document analysis.

The Purpose of Form Understanding Models

Form understanding models are designed to overcome the challenges mentioned above by extracting critical information from forms, receipts, and invoices with high accuracy. These models use advanced algorithms to:

- Identify and interpret key fields such as names, dates, addresses, and transaction details.

- Understand the layout of documents, ensuring that important elements like tables, figures, or blocks of text are processed correctly.

- Handle noisy or distorted inputs, such as low-quality scans or handwritten notes.

The primary goal of these models is to automate the extraction of structured data, enabling organizations to digitize paper-based workflows effectively. This is particularly useful for industries that handle large volumes of forms, such as banking, insurance, and logistics. By automating form understanding, companies can reduce manual data entry, minimize errors, and improve operational efficiency.

Key takeaways:

- Form understanding models automate the extraction of key fields from complex document layouts.

- These models are essential in handling diverse layouts, multi-modal data, and noisy scanned documents.

As we explore the evolution of form understanding, it becomes evident that traditional models are no longer sufficient to handle the complexities of modern scanned documents. The next section of this article will delve into the recent advances in form understanding models, particularly focusing on the shift towards transformer-based architectures and how they have revolutionized the field.

Stay tuned to discover how these cutting-edge models, such as LayoutLM and DocFormer, are paving the way for more accurate and efficient document analysis.

Recent Advances in Form Understanding Models

As the demand for efficient document processing grows, form understanding models have evolved to tackle the complexities of scanned documents. The rise of transformer-based architectures has revolutionized the landscape, offering more precise and adaptable solutions compared to traditional methods. Let’s delve into the latest advancements in these models and how they are shaping the future of form understanding.

The Shift Towards Transformer-Based Architectures

One of the most significant developments in form understanding has been the transition to transformer-based architectures. Traditionally, Optical Character Recognition (OCR) and rule-based systems were used to extract information from documents. While effective for simpler tasks, these models struggled with complex layouts, noisy data, and the multi-modal nature of scanned documents.

Transformer models, first introduced in natural language processing (NLP), have since been adapted for document understanding. Their ability to capture long-range dependencies and handle large sequences of data makes them ideal for processing documents with varied structures. Instead of focusing solely on text, transformers can also account for layout and visual information, enabling a more holistic understanding of the document.

Key improvements brought by transformer-based models:

- Self-attention mechanisms allow the model to weigh the importance of different parts of the document, regardless of their position.

- Enhanced ability to process multi-modal data, combining textual, visual, and layout features.

- Improved accuracy in handling complex, noisy scanned documents compared to rule-based and traditional OCR methods.

This paradigm shift towards transformer models has made document processing faster, more reliable, and capable of handling the intricate structures found in modern business documents like invoices and contracts.

Overview of Notable Models

Several transformer-based models have emerged as leaders in the form understanding space, each bringing unique innovations to tackle the challenges posed by scanned documents.

LayoutLM

-

- Overview: LayoutLM is one of the pioneering models designed specifically for document understanding. It extends the BERT model by integrating textual information with spatial layout, enabling it to comprehend both the content and structure of documents.

- Key Features:

- Combines text embeddings with 2D position embeddings of text tokens on the document.

- Suitable for a range of tasks, including document image classification, form understanding, and information extraction.

- Applications: LayoutLM is highly effective in tasks where spatial information plays a critical role, such as recognizing the placement of text within tables or forms.

LayoutLMv2

-

-

- Overview: Building on the success of LayoutLM, LayoutLMv2 further enhances the integration of text, layout, and visual information. This model introduces a spatial-aware self-attention mechanism, allowing it to better understand the relationship between text and images in documents.

- Key Features:

- Adds visual features during pre-training.

- Improves attention by considering both 1D and 2D relationships between tokens, making it more effective for document layout analysis.

- Pre-training tasks include masked visual-language modeling and text-image matching.

- Performance: With its advanced architecture, LayoutLMv2 shows significant improvements in tasks such as form understanding, outperforming its predecessor.

-

DocFormer

-

-

-

- Overview: Another standout model in form understanding is DocFormer, which takes a multi-modal approach by fusing vision and language at the network layer level. This results in a more cohesive model that can interpret both text and images seamlessly.

- Key Features:

- Integrates ResNet CNN for extracting visual features and combines them with tokenized text embeddings.

- Utilizes OCR-free methods for improved accuracy in extracting information from documents with various formats.

- Impact: DocFormer has been praised for its ability to process noisy and complex document layouts, particularly in industries where document classification and key information extraction are critical.

-

-

These models represent a new era in form understanding, allowing businesses to automate the processing of complex documents with greater precision and fewer errors.

Impact of Multi-Modal Learning

A significant leap in form understanding comes from the integration of multi-modal learning, where models can simultaneously process textual, visual, and spatial data. Unlike traditional approaches that focus only on the text, multi-modal models are able to:

- Interpret visual elements like tables, graphs, and images alongside text.

- Understand layout information, making them particularly useful for documents where the arrangement of text impacts its meaning, such as forms or receipts.

- Handle noise and distortions more effectively, which is common in scanned documents.

One of the key advantages of multi-modal learning is its ability to offer contextual understanding by combining different modes of data. This leads to more accurate form understanding results and is particularly useful in scenarios where documents are scanned at low resolutions or contain a mix of handwritten and printed content.

Key takeaways

- Multi-modal models are more adept at extracting information from documents with diverse content and layouts.

- The fusion of textual, visual, and layout features helps in processing noisy scanned documents, improving accuracy and reducing the need for manual correction.

As we move forward in our exploration of advancements in form understanding, it’s crucial to highlight the role of graph-based and hybrid model approaches in overcoming challenges related to text relationships and complex structural representations. These models, which leverage both transformer and graph neural network (GNN) capabilities, are pushing the boundaries of document analysis even further.

In the next section, we’ll dive into how graph-based models and hybrid transformer architectures like Fast-StrucTexT and StructuralLM are leading the way in processing complex document layouts, while balancing model performance and computational efficiency.

Graph-Based and Hybrid Model Approaches in Form Understanding

The world of form understanding in scanned documents is continuously evolving, with graph-based and hybrid models becoming key players in tackling complex document structures. These models, particularly graph neural networks (GNNs) and innovative hybrid transformer architectures, offer sophisticated methods for analyzing the intricate relationships between text, layout, and visual elements. Let’s explore how these advancements are shaping the future of document processing technologies.

Graph-Based Models

One of the most powerful innovations in document understanding is the use of graph neural networks (GNNs). In form understanding, documents are more than just sequences of text—they are rich structures of interconnected data. Graph-based models allow for a deeper analysis of these structures by representing documents as graphs where nodes are text segments and edges represent relationships between them.

Why Graph-Based Models Matter:

- They are excellent for capturing long-range dependencies between text segments, which is essential for complex documents such as contracts, forms, and invoices.

- Graph learning enables models to understand the spatial and semantic relationships within a document, improving the extraction of key information.

- GNNs can process data more flexibly than traditional models, adapting to various layouts and structures, making them ideal for noisy or unstructured scanned documents.

Key Features of Graph-Based Models

- Graph Relationship Modeling: This allows models to capture intricate relationships between text and layout in documents. For example, in multi-page reports or heavily formatted documents, understanding how text in one area relates to another is crucial for accurate information extraction.

- Soft Adjacency Matrices: Graph models build these matrices to represent pairwise relationships between text segments. This helps in capturing context even when text elements are far apart on the page.

Graph-Based Models in Action

- PICK (Processing Key Information Extraction): This model uses a graph learning module to identify relationships between text segments. It combines text embeddings and bounding box coordinates with a graph neural network, enabling it to capture contextual dependencies and handle long-range text relationships more efficiently than transformer models alone.

Examples of Hybrid Transformer Architectures

In addition to graph neural networks, hybrid models that combine transformers with other advanced mechanisms are making waves in document understanding. These hybrid architectures enhance efficiency, enabling better performance in real-world applications.

Fast-StrucTexT

-

- Overview: Fast-StrucTexT is a hybrid transformer model designed for efficiency and accuracy in document understanding. It addresses the computational complexity often associated with transformer models while still delivering high performance on multi-modal data (text, layout, and visual features).

Key Features:

-

-

- Utilizes an hourglass transformer architecture that compresses data and expands it, allowing the model to focus on essential features while maintaining fine-grained details.

- Incorporates Symmetry Cross-Attention (SCA), a two-way attention mechanism that enhances interaction between text and visual data, resulting in more accurate form understanding.

- Application: Fast-StrucTexT is especially useful for tasks like document image classification and key information extraction where efficiency is critical, such as in mobile or real-time applications.

-

StructuralLM

-

-

- Overview: StructuralLM is another standout model that builds on the transformer-based LayoutLM but introduces cell-based position embeddings instead of word-based ones. This unique structure helps the model better capture relationships between words within specific document cells, improving its understanding of complex layouts.

-

Key Features:

-

-

-

- Groups words into cells and processes them with shared position embeddings, which allows the model to treat words within the same cell as a group. This leads to more accurate spatial understanding and better layout-based analysis.

- Includes Cell Position Classification (CPC), a task that trains the model to predict where text cells belong within a document’s structure, enhancing its ability to parse and understand forms with complex layouts.

-

-

Key Takeaways on Hybrid Architectures:

- Hybrid transformer models like Fast-StrucTexT and StructuralLM strike an effective balance between performance and computational efficiency.

- They are designed to handle real-world constraints such as resource limitations in mobile and edge computing environments.

- These models leverage multiple data modalities—text, layout, and visual elements—making them more versatile for complex document understanding tasks.

Model Efficiency and Computational Trade-offs

While graph-based and hybrid transformer models have proven to be highly effective in form understanding, they also introduce the challenge of computational complexity. Transformer models, especially when combined with graph learning or multi-modal inputs, can be resource-intensive. This raises important questions about how to balance model performance with the practical need for efficiency, particularly in applications like real-time document analysis or mobile deployment.

Balancing Performance and Efficiency:

- Resource Requirements: Transformer models, particularly those handling large-scale documents, can demand substantial computational resources, including GPU memory and processing power. In resource-constrained environments such as mobile devices, running these models in real-time can be a challenge.

- Model Compression: Techniques like model pruning and quantization are often employed to reduce the size of these models without sacrificing too much performance. This allows them to run more efficiently, enabling deployment in edge computing scenarios.

- Computational Trade-Offs: While larger models tend to offer higher accuracy, there is a diminishing return when considering the increase in computational load. Hybrid models like Fast-StrucTexT and StructuralLM offer a solution by maintaining high accuracy with a more efficient architecture.

As we explore further into datasets for form understanding, it’s essential to understand how benchmark datasets like FUNSD and RVL-CDIP contribute to the success of these models. These datasets help researchers evaluate model performance, fine-tune algorithms, and develop new methods for handling the complexities of scanned documents. In the next section, we’ll dive deeper into the key datasets used in form understanding research and discuss the challenges they present for model development.

Datasets for Form Understanding: Benchmarks for Success

In the realm of form understanding for scanned documents, the effectiveness of any machine learning model relies heavily on the quality of the datasets used for training and evaluation. Datasets serve as the foundation that allows models to develop the ability to extract key information, interpret document layouts, and handle real-world complexities. In this section, we will explore the most important datasets in form understanding research, the challenges they present, and the role they play in setting benchmarks for success.

Key Datasets in Form Understanding Research

When it comes to training models for document understanding tasks, researchers have developed several widely recognized datasets that allow for consistent benchmarking and model comparison. These datasets are designed to cover a wide range of document types, from forms and receipts to complex multi-page reports.

FUNSD (Form Understanding in Noisy Scanned Documents)

The FUNSD dataset is one of the most notable resources for form understanding. It includes scanned documents with varying levels of noise and complexity, representing the challenges that many models must overcome in real-world applications.

- Content: The dataset contains 199 forms, all of which are annotated to include relationships between different fields, such as labels and corresponding answers.

- Focus: The emphasis here is on extracting key-value pairs from forms, which is a crucial capability for models aimed at understanding invoices, registration forms, and similar documents.

- Relevance: Models trained on FUNSD must handle noisy inputs, distorted text, and diverse layouts, making it a perfect benchmark for evaluating a model’s ability to process forms under less-than-ideal conditions.

RVL-CDIP (Ryerson Vision Lab Complex Document Information Processing)

The RVL-CDIP dataset is a large-scale resource featuring a wide variety of document types, including memos, letters, forms, and advertisements.

- Size: It contains over 400,000 grayscale images categorized into 16 different document types. This makes it one of the largest and most diverse datasets available for document classification tasks.

- Applications: RVL-CDIP is frequently used to evaluate models’ performance on tasks like document image classification, which is essential for sorting through vast archives of scanned documents.

- Challenges: Due to the variety of document types and layouts, models trained on RVL-CDIP must be flexible enough to adapt to different content structures while maintaining high classification accuracy

SROIE (Scanned Receipts OCR and Information Extraction)

The SROIE dataset is specifically designed for understanding scanned receipts and extracting essential information from them, such as product names, prices, and total amounts.

- Content: It consists of 1,000 scanned receipts with annotations for key fields.

- Focus: The dataset is focused on three main tasks—text localization, optical character recognition (OCR), and key information extraction.

- Relevance: SROIE is a critical dataset for models aimed at automating tasks like receipt processing and financial document management. The real-world complexity of receipts—often featuring low-quality scans and various fonts—provides a robust test for OCR performance and information extraction accuracy.

Challenges in Dataset Utilization

While these datasets provide valuable benchmarks for form understanding models, they also introduce unique challenges, particularly when dealing with noisy and inconsistent real-world documents.

Noisy Data and OCR Errors

One of the main challenges with datasets like FUNSD and SROIE is the presence of noise—blurry text, scanning artifacts, and handwritten inputs that make optical character recognition difficult. Many documents in these datasets are scanned under poor conditions, leading to misreads and incomplete data extraction.

Key Challenges:

- OCR Performance: Models often struggle with poor-quality scans, especially when documents contain handwritten text or low-contrast fonts.

- Layout Complexity: The diverse structure of documents, from single-page receipts to multi-page forms, requires models to handle layout variability efficiently.

Layout Variability

The datasets also introduce significant variability in the layout of documents. For instance, receipts in the SROIE dataset may feature complex layouts with dense text blocks and scattered key information, while documents in the RVL-CDIP dataset could range from memos to highly structured forms.

- Dynamic Layout Understanding: Models must be able to adjust to differing document formats, whether they’re densely packed receipts or structured forms with clear fields. This requires a deep understanding of both textual content and its spatial positioning.

The Role of Standardized Benchmarks

Standardized benchmarks play a pivotal role in the development and assessment of form understanding models. These benchmarks not only allow for objective comparisons between models but also provide a structured way to measure progress in the field.

Why Benchmarks Matter

- Performance Evaluation: Datasets like RVL-CDIP and FUNSD set a baseline for evaluating the accuracy, precision, recall, and overall effectiveness of models in real-world scenarios.

- Research Consistency: Standardized datasets ensure that research results are comparable, allowing different research groups to measure their models’ success against industry standards.

- Model Development: As new models are developed, benchmarks push innovation by identifying performance gaps and offering insights into where improvements can be made.

Common Evaluation Metrics

To accurately assess the performance of models on these datasets, researchers often rely on metrics such as:

- Precision and Recall: To evaluate the correctness of the model in identifying key fields.

- F1 Score: A balanced metric that takes into account both precision and recall.

- Accuracy: Measures the overall success rate of the model in tasks like document classification or information extraction.

These metrics provide a holistic view of a model’s capability to not only recognize text but also interpret its meaning within complex, noisy layouts.

As the field of form understanding progresses, datasets will continue to evolve, offering more diverse challenges and pushing the boundaries of model performance. Looking ahead, it’s clear that future advancements will require better integration of multi-modal learning, as well as improvements in handling noisy data and complex layouts. In the final section of this article, we will explore the emerging trends and challenges that lie ahead, focusing on advancements in self-supervised learning, scalability, and the potential for hybrid approaches to further revolutionize form understanding.

Future Trends and Challenges in Form Understanding

As form understanding evolves to meet the growing demand for digital transformation, new trends and challenges are shaping the future of this field. From improving multi-modal integration to addressing the resource constraints of deploying models in real-world environments, these future developments promise to revolutionize how we process and understand scanned documents. In this final section, we explore the emerging directions in model development, the challenges in scalability, and the potential impact of hybrid approaches in form understanding.

Emerging Directions in Model Development

Form understanding models have advanced significantly with the adoption of transformer-based architectures and multi-modal learning. However, further progress is needed to keep up with increasingly complex document structures and diverse data inputs.

Better Multi-Modal Integration

The future of document processing relies on enhanced integration between text, layout, and visual data. As more models embrace multi-modal learning, the ability to combine these different types of information seamlessly will be crucial for handling real-world scenarios like receipts, forms, and invoices. Models that can fully comprehend the spatial relationships between text and layout, alongside the visual context of document elements, will have a distinct advantage over traditional single-modal approaches.

- Key advancements: Newer models like LayoutLMv3 have already started integrating more advanced attention mechanisms to improve the fusion of textual and visual modalities, offering deeper document understanding. However, future models will likely push this integration even further by incorporating additional contextual data such as user interactions or document metadata.

- Expected Impact: As multi-modal integration improves, form understanding models will become even more accurate in interpreting complex layouts, handling noisy inputs, and processing unstructured documents.

Efficient Text-Position Encoding

One of the biggest ongoing challenges is the efficient encoding of text positions in scanned documents. Models must learn to navigate the unique structure of each document while ensuring that they maintain a deep understanding of both the content and the layout.

- Current techniques: Models like DocFormer have successfully incorporated position embeddings into their architecture, allowing them to account for the spatial relationships between text elements.

- Future possibilities: Dynamic text-position encoding could take this one step further, enabling models to adjust to changes in layout structure or document format on the fly. This would be particularly useful for documents with irregular layouts or those with mixed textual and graphic content.

Challenges in Scalability and Resource Constraints

While the development of transformer-based and multi-modal models has led to impressive gains in form understanding, these advancements come with a significant computational cost. Many of these models require vast amounts of data and computing power, which makes their deployment in real-world scenarios more challenging.

Scalability Issues

As organizations look to deploy form understanding models on a large scale, the scalability of these solutions becomes a pressing concern. Models that work well in research settings often struggle when applied to high-volume workflows, where documents vary widely in structure and complexity.

- Resource-heavy models: Many of the leading models in form understanding rely on extensive training data and powerful hardware to function effectively. This limits their utility in environments with constrained computational resources.

- Solution: One potential direction for addressing scalability issues is the use of model compression techniques such as pruning, quantization, or knowledge distillation. These approaches can reduce the size and computational demands of models without sacrificing accuracy.

Deploying in Constrained Environments

In addition to scalability, the ability to deploy models in resource-constrained environments—such as mobile devices or edge computing platforms—poses a significant challenge. These environments often lack the processing power and memory needed to run large models, creating a barrier for widespread adoption.

- Potential Solutions: Techniques like lightweight model architectures, cloud-based processing, and on-device optimization will play a key role in making form understanding models more accessible in resource-limited environments.

Impact of Hybrid Approaches

As form understanding continues to evolve, hybrid approaches that combine the strengths of machine learning with traditional rule-based systems offer a promising path forward. These approaches are expected to provide greater flexibility, allowing models to adapt to new document formats and scenarios more efficiently.

Combining Machine Learning and Rule-Based Systems

Traditional rule-based systems have long been used in document processing, particularly for highly structured tasks such as invoice matching or receipt processing. While these systems are limited in their ability to handle unstructured data, they excel at dealing with repetitive tasks that follow clear, predefined patterns.

- Strength of hybrid models: By combining rule-based systems with machine learning models, organizations can develop solutions that benefit from the scalability and learning capabilities of AI, while retaining the accuracy and predictability of rules-based systems.

Real-World Applications of Hybrid Approaches

Hybrid approaches could be particularly useful for industries that handle a large number of scanned documents and require a balance between speed and accuracy. For example, financial institutions processing receipts, invoices, and contracts could benefit from models that combine pattern recognition with machine learning.

- Improved adaptability: Hybrid approaches will allow models to adapt to new document types more quickly by utilizing rule-based components for handling standardized formats and AI for managing unstructured or complex layouts.

Conclusion and Recap Understanding for Scanned Documents

The field of form understanding for scanned documents has come a long way, with advancements in transformer-based models, graph-based approaches, and multi-modal learning leading the charge. Here’s a quick recap of the sections covered:

- Introduction to Form Understanding in Scanned Documents: We explored the importance of digitization and the key challenges faced in understanding scanned forms, such as dealing with noisy inputs and complex layouts.

- Recent Advances in Form Understanding Models: A deep dive into the paradigm shift toward transformer-based architectures and the impact of multi-modal learning on improving document processing accuracy.

- Graph-Based and Hybrid Model Approaches: We discussed the rise of graph neural networks (GNNs) and hybrid models like Fast-StrucTexT, and how these innovations improve document analysis while balancing performance with computational efficiency.

- Datasets for Form Understanding: Benchmarks for Success: Key datasets such as FUNSD, RVL-CDIP, and SROIE were highlighted for their role in benchmarking models, while the challenges of dataset utilization were explored in detail.

- Future Trends and Challenges in Form Understanding: Finally, we explored emerging trends like better multi-modal integration, the challenge of scalability, and the potential of hybrid approaches to further revolutionize the field.

As the landscape of form understanding continues to evolve, it’s clear that multi-modal learning, efficient model deployment, and scalable solutions will be at the heart of future advancements. With ongoing research and development, we can expect to see more versatile models capable of handling the growing complexities of scanned documents in real-world environments.

FAQ: Understanding for Scanned Documents

Q: Why are transformer models so resource-intensive?

A: Transformer models process data in parallel across multiple attention layers, which requires substantial computational power and memory, especially for large documents or multi-modal inputs.

Q: Can graph-based models be used in mobile applications?

A: With model compression techniques, it is possible to optimize graph-based models for mobile use, though they still require more resources than simpler models.What is the biggest

Q: Challenge in utilizing noisy datasets?

A: The most significant challenge is ensuring accurate OCR performance when faced with poor scan quality, handwritten inputs, or heavily distorted documents. This can significantly impact the model’s ability to extract and interpret key information.What are the key

Q: Challenges in deploying form understanding models on mobile devices?

A: The main challenges are computational resource constraints and the lack of memory to process complex models like those used for multi-modal form understanding. Solutions include developing lighter model versions and optimizing them for edge computing.

Explore the full [Paper] for in-depth insights. All recognition and appreciation go to the brilliant researchers behind this project. If you enjoyed reading, make sure to connect with us on [Twitter, Facebook, and LinkedIn] for more insightful content. Stay updated with our latest posts and join our growing community. Your support means a lot to us, so don’t miss out on the latest updates!